Why blocking GPTBot & other AI web crawlers is a bad move

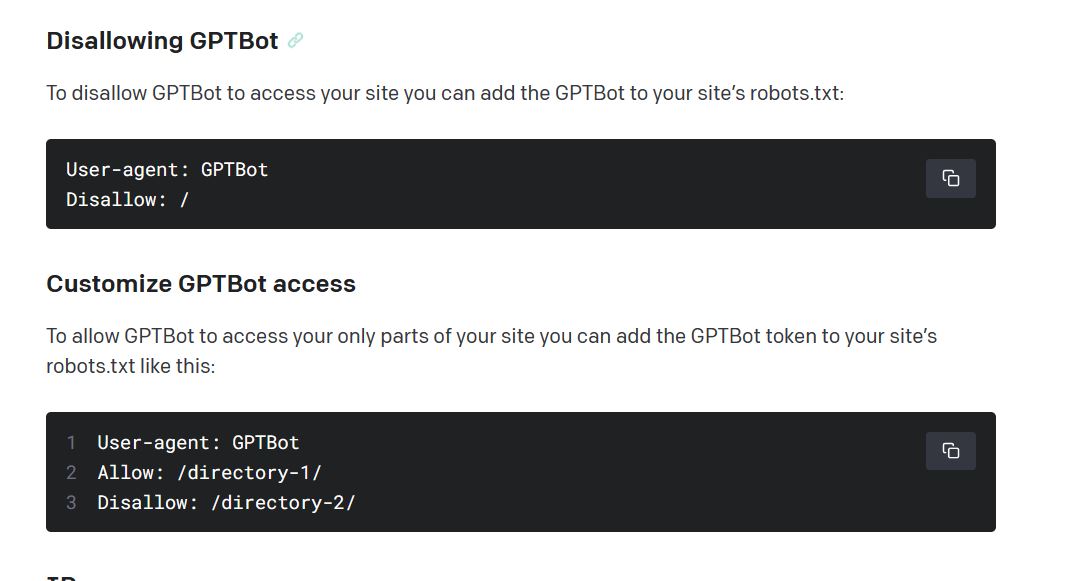

OpenAI announced its support for robots.txt for GPTBot, which means you can block OpenAI from harvesting your website content.

As Search Engine Optimisation specialists know, this option has been available for years for search engines, some SEO tools and other popular web crawlers & scrapers – however, not many websites make good use of it.

Today, SEO specialists advise blocking GPTBot and other AI web scrapers (probably a better term than crawlers, which are normally associated with search engine spiders).

However, blocking GPTBot for an entire web domain is the wrong move.

What counts for a brand is not website traffic but how persuasively its information reaches people.

Blocking AI bots will keep your brand's own narrative out of tomorrow’s knowledge sources (conversational chatbots are tomorrow’s search engines).

You can still limit GPTBot's access to parts of your site, but blocking it from the entire website is not advisable.
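As a rough illustration of limiting rather than blocking: OpenAI identifies its crawler by the `GPTBot` user-agent token, so a robots.txt group for that agent can disallow selected paths and leave the rest open. The sketch below uses Python's standard `urllib.robotparser` to check what such a file would permit. The `/members/` and `/drafts/` paths, the `example.com` domain and the `gptbot_can_fetch` helper are hypothetical placeholders chosen for the example, not something taken from OpenAI's documentation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: GPTBot is kept out of two sections only.
# The paths are placeholders; adapt them to your own site structure.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /members/
Disallow: /drafts/
"""


def gptbot_can_fetch(url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt text lets GPTBot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("GPTBot", url)


if __name__ == "__main__":
    for url in (
        "https://example.com/blog/why-aio-matters",
        "https://example.com/members/profile",
    ):
        print(url, "->", "allowed" if gptbot_can_fetch(url) else "blocked")
```

Keep in mind that robots.txt is a voluntary convention: it only restricts crawlers that choose to honour it.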

A new search paradigm is coming

Hopefully, the upcoming switch from smart yellow pages (Google) to smart advisors (AI-powered conversational chatbots) will make the SERP (Search Engine Result Page) ranking race a thing of the past.

The digital marketing industry should get rid of grey SEO tactics aimed at tricking algorithms and move towards meaningful information sharing.

The generative AI tools in search seen so far (Bing, You, Google SGE) do not yet seem capable of replacing ‘traditional’ search features.

However, what can be called ‘traditional’ nowadays? SERPs are already full of features where AI plays a role. Beyond (smart) search ads, think of rich snippets, comparisons, answers, custom/local info and so on.

Many more valuable and attractive features already push down organic search results.

AI summarises the most relevant findings from various sources into one snippet rather than separately listing findings for each source.

One way for AI-powered conversational search features to gain fast adoption is to dynamically suggest longer questions based on user queries and topical search history. Timing is crucial, since the user’s search intent changes with the funnel stage that the queries reveal.

Search engine algorithms transformed the web into a wasteland of mediocrity

The current search engine ranking criteria transformed the web into a wasteland of SEO-generated mediocrity.

Most of the so-called reputable media industry ended up relying on poor clickbait techniques and elegant fake news, surrounded by tons of annoying ads and often locked behind paywalls.

Let’s be honest: no organisation is fully honest, starting with news factories, which usually push specific agendas according to their investors, advertisers, positions, etc.

Single voices pursue their interests. Only crowdsourced information can be considered valid since it brings many voices to the storytelling process.

Therefore answers based on multiple sources tend to be more valid than answers based on single sources.

However, the most visible and valuable content tends to be non-promotional, crowd-sourced, rich, and updated, moved by altruism rather than purely promotional goals – think of Wikipedia.

Quality > quantity always prevails in business, at least in the medium/long term. Short-term victories won’t ensure business sustainability.

Nowadays, capitalism has started to delegate marketing efforts to customers by rewarding evangelists and early adopters, a kind of religious missionary – think of Apple or Tesla fanatics.

Reviews boosted by digital platforms are powerful profit drivers – word of mouth still being the most effective marketing channel.

However, we all know how fake reviews (like fake news) affect their validity.

And besides that, ‘the wisdom of the crowd’ is not that wise after all, considering, for example, election results massively fueled by crap propaganda worldwide.

So AI tools might be a better way to select and interpret valid content, moving beyond biased content, whether mass social proof or SEO-driven content.

AI tools can help get rid of all those pages built with the sole purpose of leading SERPs.

Digital platforms’ key goal is to keep users on the platform

Before any sales, interactions, or persuasion, digital platforms are eager to eat your most valuable asset: time.

Time is consumption, and consumption creates advertising space, aka income opportunities.

What counts is not the absolute number of profiles (or rather, of users, considering that a user can have multiple profiles) but the time that users spend on the platform, even before considering how they spend it.

To all the supposed SEO professionals scared by the growing number of AI web scrapers, it’s worth remembering that a vital goal of any digital platform (social networks and search engines) is to keep users within the platform.

Letting a user move away from a platform is a cost that ads must compensate for.

For websites, getting traffic for free will become more challenging as the solutions offered by search platforms (including social networks) become richer and smoother.

Skipping search platforms means missing the marketplaces where users spend most of their time.

Many businesses won’t need a rich website (which is costly to get right in terms of content, design, SEO, UX, hosting, security, integrations, translations, etc.) when they can supply a global platform with their feeds via API and focus on the core elements of their business: product/service (including pricing) & CX. Their core asset, their audience, will be built on primary data, since tracking pixels will also be a thing of the past.

From SEO to AIO

As I wrote some time ago, AIO (Artificial Intelligence Optimisation) will be the new SEO. If you want to skip the race, block AI web scrapers, and your brand-owned narrative won’t be mentioned anymore. But others can still talk about your brand, leaving you with no control over how it appears in AI-powered conversational chatbots.