The News We See: How Facebook Fuels Political Polarization

Research published in Science reveals ideological “echo chambers” on social media

Facebook (Meta) has transformed how we get news and information.

According to Meta Inc.’s own claims, Facebook has over two billion users and counting, or rather profiles, probably half of them fake, duplicate, bot, scammer, dead, or banned accounts. Either way, Facebook is the largest social media network worldwide. Considering its typical current user, a functionally illiterate boomer and an easy catch for propaganda machines of any kind, the U.S.-based network founded and still controlled by Mark Zuckerberg greatly impacts election results worldwide.

Threads, Meta’s new anti-Twitter (or rather, anti-X) network, has been a huge flop: 100 million users in the first week after launch, and more than half of them lost the following week. Both are records, even though technically these were not new users but existing Instagram users who activated Threads.

Between the Metaverse flop, the recent introduction of paid verified profiles as the only way to get user support (otherwise totally absent), and paid plans to watch creators’ content, this is not a good time for Meta. These moves look like a desperate attempt to offset falling advertising revenue, a decline driven by poor ad performance and amplified after Apple’s iOS 14 changes restricted conversion tracking, officially for privacy reasons but mainly for business ones.

Beyond the technical and business side, Facebook’s rise raises a major question for users and policymakers alike: is it dividing us politically?

A major new study published in Science in July 2023, ‘Asymmetric ideological segregation in exposure to political news on Facebook’, suggests it is.

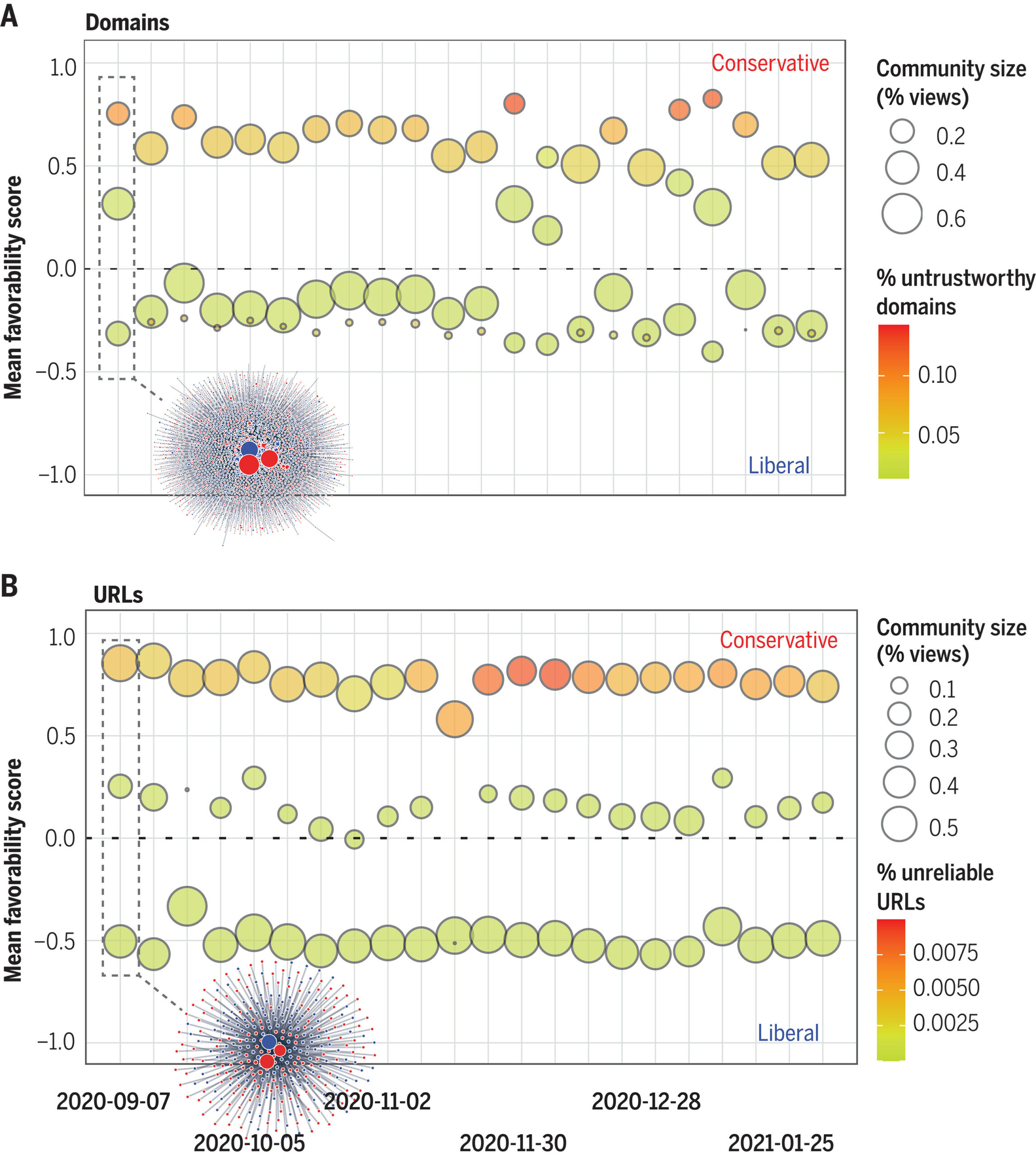

A team of 27 researchers, academics working alongside Meta employees, analyzed Facebook data for 208 million U.S. users during the 2020 election. They examined the full universe of news stories people could potentially see in their feeds, then compared it with the narrower selection of stories Facebook’s algorithm actually showed them.

Facebook contributes overwhelmingly to the ideological segregation and political polarization of society

The researchers found high levels of “ideological segregation” on Facebook. Conservatives tended to see news catering to their views, and the same was true for liberals. This segregation grew stronger as stories moved from potential exposure to actual exposure, and again from exposure to engagement.
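
How can segregation be put into a number? A standard metric in this literature is the isolation index (Gentzkow and Shapiro), and to my understanding it is the kind of measure the study reports; the Python below is only an illustrative sketch, not the researchers’ code. It takes the average conservative share of the audience of the stories conservatives saw and subtracts the same average for liberals. The audience counts are made-up toy numbers, not data from the study.

```python
def isolation_index(audiences: list[tuple[int, int]]) -> float:
    """Isolation index over (conservative, liberal) audience counts per story.

    Average conservative audience share of the stories conservatives saw,
    minus the same average for liberals: 0 when every story has the same
    audience mix, 1 when no story reaches both groups.
    """
    total_cons = sum(cons for cons, _ in audiences)
    total_lib = sum(lib for _, lib in audiences)
    if total_cons == 0 or total_lib == 0:
        raise ValueError("need at least one conservative and one liberal viewer")
    cons_exposure = 0.0  # conservatives' average exposure to conservative audiences
    lib_exposure = 0.0   # liberals' average exposure to conservative audiences
    for cons, lib in audiences:
        if cons + lib == 0:
            continue  # a story nobody saw contributes nothing
        cons_share = cons / (cons + lib)
        cons_exposure += cons / total_cons * cons_share
        lib_exposure += lib / total_lib * cons_share
    return cons_exposure - lib_exposure


# Hypothetical (conservative, liberal) audiences for two stories.
potential = [(60, 40), (40, 60)]  # who could have seen each story
exposed = [(50, 10), (10, 50)]    # who the feed actually showed it to

print(f"potential exposure: {isolation_index(potential):.2f}")  # 0.04
print(f"actual exposure:    {isolation_index(exposed):.2f}")    # 0.44
```

With these invented numbers the index jumps from 0.04 to 0.44 between the two stages: the same direction of change the study describes, though the magnitudes here mean nothing.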

Political polarization is the divergence of political attitudes away from the center, towards ideological extremes. Most discussions of polarization in political science consider polarization in the context of political parties and democratic systems of government. (Source: English Wikipedia)

There was also a striking asymmetry between left and right. A sizable segment of conservative news was isolated and consumed only by right-leaning audiences. No equivalent bubble existed on the left.

The researchers also looked at misinformation: stories flagged as false by Meta’s third-party fact-checkers. The vast majority of these bogus stories resided in the uniquely conservative bubble.

In other words, conservatives on Facebook inhabit an alternate media universe to a greater extent than liberals. Their feeds promote partisan news – and sometimes fake news – more aggressively.

Figure: Top communities in co-exposure networks.

Political polarization taken to extremes by a cynical algorithm

To understand these findings, we need to grasp how news reaches Facebook users:

- The underlying network matters. Who you friend, follow and join shapes your potential exposure.

- Facebook’s algorithm then filters the stories coming from this network based on your interests, elevating certain ones into your actual feed.

- You engage with some stories by reacting, commenting, and sharing. This signals to Facebook what you like, steering the algorithm further.
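
The three steps above can be sketched as a toy pipeline. This is a deliberately simplified model, not Facebook’s ranking system, whose details are not public: `Story`, `User`, the `affinity` scores and the `boost` value are all hypothetical, and “ranking” here simply sorts candidates by how much the user has engaged with each political leaning so far.

```python
from dataclasses import dataclass, field


@dataclass
class Story:
    story_id: str
    source: str   # the friend, page, or group that posted it
    leaning: str  # hypothetical label: "left" or "right"


@dataclass
class User:
    follows: set[str]
    # Hypothetical learned preference per leaning; starts neutral.
    affinity: dict[str, float] = field(
        default_factory=lambda: {"left": 1.0, "right": 1.0}
    )


def potential_exposure(user: User, stories: list[Story]) -> list[Story]:
    """Step 1: the network decides which stories could reach the feed."""
    return [s for s in stories if s.source in user.follows]


def rank_feed(user: User, candidates: list[Story], k: int) -> list[Story]:
    """Step 2: keep the k candidates the user is predicted to like most.

    sorted() is stable even with reverse=True, so ties keep their order.
    """
    ranked = sorted(candidates, key=lambda s: user.affinity[s.leaning], reverse=True)
    return ranked[:k]


def record_engagement(user: User, story: Story, boost: float = 0.5) -> None:
    """Step 3: a reaction, comment, or share nudges future ranking."""
    user.affinity[story.leaning] += boost


if __name__ == "__main__":
    stories = [
        Story("s1", "page_a", "left"),
        Story("s2", "page_b", "right"),
        Story("s3", "group_c", "right"),
        Story("s4", "page_d", "left"),  # not followed: never a candidate
    ]
    user = User(follows={"page_a", "page_b", "group_c"})
    candidates = potential_exposure(user, stories)
    record_engagement(user, candidates[1])  # the user shares s2
    feed = rank_feed(user, candidates, k=2)
    print([s.story_id for s in feed])  # ['s2', 's3']
```

Even in this toy, a single share is enough to push the only left-leaning candidate out of a two-slot feed, and further engagement with the right-leaning stories now filling it would widen the gap. It illustrates the feedback loop described in the list, not its actual strength on Facebook.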

Two other insights emerged:

- Pages and groups drive segregation more than friends do. This suggests ideology plays a bigger role in choosing which pages and groups to follow than in choosing which individuals to befriend.

- Users with high political interest see twice as much segregation as low-interest users. They opt into partisan echo chambers more readily.

Tell me who you follow, and I will tell you who you vote for…ever

Past research using web browsing data found limited “filter bubbles” online. But this study suggests social media enables far more segregation in news consumption.

Browsing data that only records visits to a website like “Fox News” can miss important differences in the specific stories users see. Granular data on individual news articles, not just outlets, is key.
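
To see why article-level data matters, here is a hedged sketch with made-up numbers: one outlet whose two stories reach opposite audiences, plus a second outlet with a genuinely mixed audience. It computes a standard segregation metric, the isolation index, once after pooling views by domain and once per URL. The domains, URLs, and counts are hypothetical placeholders.

```python
from collections import defaultdict


def isolation_index(audiences: list[tuple[int, int]]) -> float:
    """Isolation index over (conservative, liberal) audience counts per unit.

    Sums, over units, the gap between each unit's share of all conservative
    views and its share of all liberal views, weighted by the unit's
    conservative audience share.
    """
    total_cons = sum(cons for cons, _ in audiences)
    total_lib = sum(lib for _, lib in audiences)
    if total_cons == 0 or total_lib == 0:
        raise ValueError("need at least one conservative and one liberal viewer")
    score = 0.0
    for cons, lib in audiences:
        if cons + lib:
            cons_share = cons / (cons + lib)
            score += (cons / total_cons - lib / total_lib) * cons_share
    return score


def pool(rows: list[dict], key: str) -> list[tuple[int, int]]:
    """Sum (conservative, liberal) audiences per value of `key` ("domain" or "url")."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    for row in rows:
        totals[row[key]][0] += row["cons"]
        totals[row[key]][1] += row["lib"]
    return [(cons, lib) for cons, lib in totals.values()]


# Made-up data: outlet-a runs two stories with opposite audiences.
rows = [
    {"domain": "outlet-a.example", "url": "outlet-a.example/story-1", "cons": 40, "lib": 0},
    {"domain": "outlet-a.example", "url": "outlet-a.example/story-2", "cons": 0, "lib": 40},
    {"domain": "outlet-b.example", "url": "outlet-b.example/story-3", "cons": 30, "lib": 30},
]

print(f"domain level: {isolation_index(pool(rows, 'domain')):.2f}")  # 0.00
print(f"URL level:    {isolation_index(pool(rows, 'url')):.2f}")     # 0.57
```

Pooled by outlet, the two groups look perfectly mixed; story by story, outlet-a’s two audiences never overlap. That gap is precisely what outlet-level browsing data cannot see.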

The findings also underscore the asymmetric polarization of America’s media ecosystem. Conservative media nurtures a more cloistered audience than liberal media. On platforms like Facebook, this asymmetry gets amplified algorithmically.

Social media expands our information horizons. But the factions we prefer to follow can consolidate our prejudices and biases. Facebook’s algorithms cater to those biases, potentially fueling political tribalism.

Hordes of devotees feast daily on media lies, whatever the political party, and then share them with their peers, reinforcing their supposed importance. It is worth remembering, however, that popularity does not imply validity.

Extremist political parties fuel the trend. For example, according to the public ad spending data provided by Meta, the biggest spender on political ads in Belgium is the right-wing Flemish independence party Vlaams Belang (directly or through its representatives). Millions of euros in taxpayers’ money fund political parties’ propaganda, ultimately boosting the revenue of the American social network, which sidesteps local taxation thanks to its Irish tax haven.

The research published in Science highlights the need for transparency from social media platforms, and for society to navigate the internet’s risks and rewards thoughtfully. Facebook is clearly not the only platform. Still, Meta’s platforms are the most influential in political polarization, both because of the number of users involved and because of the algorithm that generates each user’s personalized news feed. Facebook alone won’t fix political divides, but understanding its impact is crucial to mitigating them; otherwise, future elections will be pointless.

The article was also published on DataDrivenInvestor via Medium.